Troubleshooting

I cannot save some or any settings, I get a Cannot communicate with server Unexpected token < in JSON error message

As of 2023-02-27, a bug in the YooTheme WidgetKit component breaks communication between 4SEO (and potentially other extensions, from us and from others) and your Joomla site.

WidgetKit 3.1.16 was released around March 17, 2023, and should contain a fix for this issue. Please refer to the YooTheme support forum thread where this is discussed for any further assistance from YooTheme.

This is not a problem in 4SEO, and nothing can be done on our side to fix it.

We have identified the problem, and multiple users have passed the information on to YooTheme.

Until you can install a fixed update from YooTheme, you can:

- disable WidgetKit if you do not actually use it

or

- apply the following bug fix to WidgetKit:

1 - Open the file /administrator/components/com_widgetkit/vendor/yootheme/framework/src/Routing/Request.php. At around line 67, you'll see:

    $this->server = new ServerBag($server ?: $_SERVER);
    $this->headers = new HeaderBag($this->server->getHeaders());

    // decode json content type
    if (stripos($this->headers->get('CONTENT_TYPE') ?: '', 'application/json') !== false) {
        if ($json = json_decode(@file_get_contents('php://input'), true)) {
            $this->request->add($json);
        }
    }

2 - Replace this section so that it looks like this:

    $this->server = new ServerBag($server ?: $_SERVER);
    $this->headers = new HeaderBag($this->server->getHeaders());

    // decode json content type
    // Fix by Weeblr
    if (stripos($this->headers->get('CONTENT_TYPE') ?: '', 'application/json') !== false) {
        if ($json = json_decode(@file_get_contents('php://input'), true)) {
            $saferJson = empty($json) || !is_array($json)
                ? [$json]
                : $json;
            $this->request->add($saferJson);
        }
    }
    // End fix by Weeblr

3 - After saving the modified file, 4SEO should work normally again
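The `Unexpected token <` part of the error message means the browser received something starting with `<`, usually an HTML or PHP error page, where it expected JSON. As a rough diagnostic, you can copy the response body from your browser's developer tools and check it with a sketch like the one below. The function name `looks_like_json` is hypothetical and not part of 4SEO.

```python
import json


def looks_like_json(body: str) -> bool:
    """Return True if `body` parses as JSON, False otherwise.

    A body starting with "<" (an HTML or PHP error page) is what
    produces the "Unexpected token <" message in the browser.
    """
    try:
        json.loads(body)
    except json.JSONDecodeError:
        return False
    return True


if __name__ == "__main__":
    print(looks_like_json('{"status": "ok"}'))
    print(looks_like_json("<br />\n<b>Fatal error</b>: ..."))
```

If the body is not JSON, its first lines usually name the extension and file that raised the PHP error.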

I cannot save some or any settings, I get a Cannot communicate with server Unexpected token < in JSON error message (JoomlaTools)

As of 2023-07-20, websites using any of the JoomlaTools extensions such as Docman or Logman will experience the same issues as described above for WidgetKit.

These extensions include the JoomlaTools Koowa framework, usually as a Joomla system plugin. This framework incorrectly intercepts 4SEO communication with your server, then fails with a PHP error while doing so, which prevents 4SEO from operating.

JoomlaTools is aware of the problem but, to our knowledge, no fixed version has been released yet. We invite you to contact JoomlaTools directly to obtain a fixed version, or to disable or uninstall these extensions when no other option exists.

Until JoomlaTools releases a fixed version, you can:

- disable that extension if not actively using it

or

- apply the following bug fix:

1 - Open the file /libraries/joomlatools/library/dispatcher/request/abstract.php. At around line 211, you'll see:

    $this->data->add($data);

2 - Replace this section so that it looks like this:

    // Weeblr 2023-07-20
    //$this->data->add($data);
    $saferData = !is_array($data)
        ? [$data]
        : $data;
    $this->data->add($saferData);
    // Weeblr 2023-07-20

3 - After saving the modified file, 4SEO should work normally again
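Both patches above apply the same guard: a decoded request payload that is not an array is wrapped in one before being passed on. The Python sketch below mirrors that logic for illustration only. It is not the PHP fix itself, `safer_payload` is a hypothetical name, and Python's `dict` and `list` stand in for a PHP array.

```python
import json
from typing import Any, Union


def safer_payload(raw: str) -> Union[dict, list]:
    """Decode a JSON request body and always return a container."""
    data: Any = json.loads(raw)
    # A bare string, number, or boolean is valid JSON but is not a container;
    # wrap it in a list, as the patches wrap non-array values in a PHP array.
    if not isinstance(data, (dict, list)):
        return [data]
    return data
```

With this guard, a body such as `"hello"` becomes `["hello"]`, while objects and arrays pass through unchanged.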

I cannot save some or any settings

This is caused in most cases by a security system called mod_security running on your server. Depending on mod_security configuration (which changes from one hosting company to the next), requests made by 4SEO to save data to the server may be blocked.

If you run your own server, you can disable mod_security altogether, or study the log files to see which rules are triggered and adjust them to allow 4SEO to work.

Otherwise, you'll need to talk to your hosting company so that they can do that for you.
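When studying the log files, the triggered rules are commonly identified by an `[id "..."]` tag in each ModSecurity message. The sketch below counts those ids in a piece of log text. The sample line is made up for illustration, and the exact log format and file location depend on your server configuration, so treat this as a starting point rather than a definitive tool.

```python
import re
from collections import Counter

# ModSecurity messages commonly tag the matching rule as [id "123456"].
RULE_ID = re.compile(r'\[id "(\d+)"\]')


def count_rule_ids(log_text: str) -> Counter:
    """Count how often each ModSecurity rule id appears in log text."""
    return Counter(RULE_ID.findall(log_text))


if __name__ == "__main__":
    # Hypothetical log line, shortened for illustration.
    sample = (
        'ModSecurity: Access denied with code 403 (phase 2). '
        '[id "949110"] [msg "Inbound Anomaly Score Exceeded"] '
        '[uri "/index.php"]\n'
    )
    for rule_id, hits in count_rule_ids(sample).most_common():
        print(rule_id, hits)
```

The ids that show up when you try to save 4SEO settings are the ones to review or hand over to your hosting company.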

The analysis stops immediately, no page is scanned, I am running Joomla on my local machine

This will usually happen if you run your local site under https. On a local web server, this often implies using a self-signed certificate, which PHP will not accept. Each time 4SEO tries to load a page in the background to analyze it, the request fails with a 0 error code.

The fix is to open the Settings dialog on the Pages page, go to the Site analysis tab and make sure Validate TLS certificates is disabled.

After that, you can restart the analysis by:

- using the Reset analysis button on the same Settings tab

- starting the analysis with the Analyze now button.
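To illustrate what certificate validation means here, the Python sketch below builds a TLS context with validation switched on or off. It is only an analogy for the Validate TLS certificates option, since 4SEO itself runs in PHP, and `build_tls_context` is a hypothetical helper.

```python
import ssl


def build_tls_context(validate: bool = True) -> ssl.SSLContext:
    """Return a client TLS context, optionally skipping certificate checks."""
    context = ssl.create_default_context()
    if not validate:
        # Order matters: check_hostname must be disabled before verify_mode
        # can be set to CERT_NONE.
        context.check_hostname = False
        context.verify_mode = ssl.CERT_NONE
    return context
```

With `validate=True`, a request to a site using a self-signed certificate fails during the TLS handshake, before any page content is read. Turning validation off is only reasonable on a local development machine.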

The analysis stops immediately, no page is scanned (Reason #2)

Another reason for this is that your site is protected with a password. If so, 4SEO cannot load your site pages and cannot analyze them.

4SEO can still work with this if you use the common protection method of setting a username/password through your .htaccess file.

If you set up this username/password using your hosting company control panel, they also normally use the .htaccess method and 4SEO can work with it as well.

The fix is to open the Settings dialog on the Pages page and go to the Site analysis tab. Scroll down to the Restricted access section and enter your site's protection username and password in the corresponding input fields.

After that, you can restart the analysis by:

- using the Reset analysis button on the same Settings tab

- starting the analysis with the Analyze now button.
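Password protection set up through .htaccess most often relies on HTTP Basic authentication, where the username and password travel in an `Authorization` request header. The sketch below shows how such a header is built, assuming Basic authentication is in use. The function name and credentials are placeholders, and this is not 4SEO's own code.

```python
import base64


def basic_auth_header(username: str, password: str) -> dict:
    """Build the Authorization header used by HTTP Basic authentication."""
    token = base64.b64encode(f"{username}:{password}".encode("utf-8"))
    return {"Authorization": "Basic " + token.decode("ascii")}
```

A crawler that sends this header with each request can read the protected pages, which is why entering the credentials in the Restricted access section lets the analysis proceed.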

The analysis stops immediately, no page is scanned (Reason #3)

By default, 4SEO will apply all directives meant to prevent search engines from indexing your site. This includes:

- robots.txt exclusions

- noindex meta tags

This means any page that's excluded from being crawled by search engines will also not be analyzed by 4SEO.

If you want to analyze pages that are excluded from search engines, you can disable this feature in the Settings dialog on the Pages page:

Go to the Site analysis tab and set the following options to No:

- Apply robots.txt exclusions

- Apply meta tag noindex exclusions

You can then restart the analysis by using the Reset analysis button on the same Settings tab.
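To check whether your robots.txt file excludes a given page, you can test it with Python's standard `urllib.robotparser` module. This is a minimal sketch: the rules and URLs are made up, the wildcard user agent is an assumption, and 4SEO's own interpretation of robots.txt may differ in details.

```python
from urllib.robotparser import RobotFileParser


def is_crawlable(robots_txt: str, url: str, user_agent: str = "*") -> bool:
    """Return True if `robots_txt` allows `user_agent` to fetch `url`."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    # Hypothetical robots.txt content.
    rules = "User-agent: *\nDisallow: /private/\n"
    print(is_crawlable(rules, "https://example.com/private/page"))
    print(is_crawlable(rules, "https://example.com/blog/post"))
```

If your home page itself is disallowed, the analysis has nothing to start from, which matches the symptom described in this section.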

The analysis stops immediately, no page is scanned (Reason #4)

When using a full page caching system, 4SEO is prevented from reading the content of your page (because the page is actually not built by your Joomla site where 4SEO lives but instead directly returned from the cache). Full page caching solutions include:

- Cloudflare or other CDN when configured to cache the entire page HTML

- Some 3rd-party Joomla caching plugins

- Some server-level caching systems, such as Varnish

If you use one of these, or a similar solution, you should enable the 4SEO cache-bypass feature:

- Open the Settings dialog on the Pages page

- Go to the Site analysis tab

- Scroll down to the External cache bypass (CDN) option and enable it

This has no effect on your site caching

4SEO external cache bypass only applies to 4SEO itself, when it crawls your site. Your site is still cached as expected for your regular visitors or search engines.

Cloudflare

What's described above only applies when Cloudflare is configured to cache the full content of all pages. If you use the Cloudflare CDN the way 99% of us do, that is to cache images, JavaScript files or CSS files, then this does not affect 4SEO site analysis, and you do not need to do anything.
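One way to tell whether full page caching is in play is to look at the response headers of one of your HTML pages, for instance in your browser's developer tools. The sketch below applies a simple heuristic to those headers. The function name is hypothetical, and the heuristic is an assumption that will not recognize every caching setup.

```python
def served_from_cache(headers: dict) -> bool:
    """Guess from response headers whether a page came from a cache."""
    normalized = {name.lower(): value for name, value in headers.items()}
    # Cloudflare reports cache results in the CF-Cache-Status header.
    if normalized.get("cf-cache-status", "").upper() == "HIT":
        return True
    # Shared caches such as Varnish typically add an Age header (in seconds).
    age = normalized.get("age", "0").strip()
    return age.isdigit() and int(age) > 0
```

If this returns `True` for an HTML page, as opposed to an image or a CSS file, enabling the External cache bypass (CDN) option is likely needed.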

The analysis stops immediately, no page is scanned (Reason #5)

If you run your site on a private server or a dedicated server behind a caching layer (Varnish for instance) or any sort of proxy, on rare occasions a misconfiguration of your server DNS can prevent 4SEO from analyzing your site.

This happens if your server DNS is unable to resolve your website hostname. A sign this is happening is if it takes a long time (30 seconds usually) for 4SEO to start crawling the 1st page and then it fails without any pages being scanned.

The fix is to correct the server DNS configuration so that your website hostname can be resolved from the server itself.
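To confirm the diagnosis, you can test name resolution from the server itself. The following sketch, to be run on the web server, reports whether a hostname resolves and how long the attempt took. `check_dns` is a hypothetical helper and `www.example.com` is a placeholder for your own hostname.

```python
import socket
import time


def check_dns(hostname: str) -> tuple:
    """Try to resolve `hostname`; return (resolved, seconds_taken)."""
    started = time.monotonic()
    try:
        socket.getaddrinfo(hostname, 443)
        resolved = True
    except socket.gaierror:
        resolved = False
    return resolved, time.monotonic() - started


if __name__ == "__main__":
    # Replace with your own site hostname; run this on the web server itself.
    print(check_dns("www.example.com"))
```

A failed or very slow lookup is consistent with the long delay described above.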

The analysis stops immediately, no page is scanned (Reason #6)

You are using Cloudflare Bot Fight Mode. Cloudflare Bot Fight Mode tries to detect robots crawling sites to spam or attack them. If it thinks a request is from a robot, this feature will block the request.

Unfortunately, 4SEO is considered a robot by Cloudflare and will be blocked by this feature. Cloudflare does not offer any configuration or any way to tell that 4SEO is a valid, acceptable crawler, so the only option is to disable this feature on your Cloudflare dashboard, under the Security menu header.

How long will the analysis take?

As usual, it depends. The most important thing for us is that the analysis process does not slow down your site when pages are displayed to visitors. Analysis is done in the background, trying to find a balance between doing the analysis quickly and not slowing down the site. But not slowing down the site always has the highest priority.

Two things affect analysis duration:

- how large the site is

- how much traffic (visitors) your site receives

See a more detailed explanation of how background analysis works in the Pages documentation.

On large sites, with many tens of thousands of pages or more, the analysis will take longer. If a site has a lot of visitors, the analysis will be much quicker. If you have only a few visitors a day, the analysis will be much slower.

Full site analysis can take anywhere from a few seconds to several days.

Here are a few things to consider:

- You can run the analysis manually from the 4SEO control panel, on the Pages page, using the Analyze now toolbar button.

- You may instruct 4SEO to skip some parts of your site if they do not have any SEO value, for instance a forum, which has many pages but few with actual content. Use the Excluded pages option in the Settings of the Pages page for that.

- Many 4SEO features work without the analysis being complete: social network sharing, structured data generation, redirections, content replacement, and more.

Where is my sitemap?

4SEO generates a single sitemap at the address https://<yoursite>/sitemap-4seo.xml

Why do I get a "Service unavailable" error trying to load the sitemap?

A sitemap should only be generated when a site has been fully analyzed, because that's the only way to find out which pages should be in the sitemap and which ones should be discarded (duplicates typically).

Until the site analysis has been completed, if you or Google request the sitemap, 4SEO will respond with the 503 Service unavailable message, which is what search engines expect when a page is being worked on and not ready at the moment.

The 503 response code tells the visitor: this page is not ready now, but it will be soon, so just come back later.

This response is normal and expected by search engines.
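As a quick reference, the sketch below maps the HTTP status code returned for the sitemap address to a likely diagnosis, based on the cases described in this document. The function name and the wording of the messages are illustrative only.

```python
from http import HTTPStatus


def describe_sitemap_status(status_code: int) -> str:
    """Map an HTTP status code for the sitemap URL to a short diagnosis."""
    if status_code == HTTPStatus.OK:
        return "sitemap is ready"
    if status_code == HTTPStatus.SERVICE_UNAVAILABLE:
        return "analysis not complete yet, try again later"
    if status_code == HTTPStatus.NOT_FOUND:
        return "request is probably blocked before reaching 4SEO"
    return "unexpected response, check your server configuration"
```

You can read the status code in the Network tab of your browser's developer tools when loading the sitemap address.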

Why is there no page listed in the sitemap file itself?

If you open the file https://<yoursite>/sitemap-4seo.xml, you won't see a list of page addresses as you may be used to.

4SEO uses an index sitemap file format: the sitemap-4seo.xml file does not contain all URLs directly but instead a list of other, partial sitemaps.

This allows handling very large websites, with hundreds of thousands of pages without any issue. It works just fine as well for much smaller sites, with only a few pages.

4SEO will only put up to 1000 pages in each partial sitemap file. This makes each file small, fast and easy to manipulate and update.

Search engines such as Google or Bing will first read the index sitemap file at https://<yoursite>/sitemap-4seo.xml and then each partial sitemap they find listed there.

You can follow the progress of search engines reading your sitemap on the Sitemaps page in the 4SEO admin panel.

On multilingual sites, pages in each language will be put in separate partial sitemaps.
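To see which partial sitemaps an index file points to, you can parse it with a few lines of Python. The sketch below follows the standard sitemaps.org index format. The sample content and the partial sitemap file names in it are placeholders, not the names 4SEO actually generates.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def list_partial_sitemaps(index_xml: str) -> list:
    """Return the URLs of the partial sitemaps listed in a sitemap index."""
    root = ET.fromstring(index_xml)
    locations = root.findall("sm:sitemap/sm:loc", NS)
    return [loc.text.strip() for loc in locations if loc.text]


if __name__ == "__main__":
    # Hypothetical index; the partial sitemap file names are placeholders.
    sample = """<sitemapindex xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <sitemap><loc>https://example.com/partial-sitemap-1.xml</loc></sitemap>
  <sitemap><loc>https://example.com/partial-sitemap-2.xml</loc></sitemap>
</sitemapindex>"""
    print(list_partial_sitemaps(sample))
```

Each returned address is a regular sitemap that lists page URLs and can be opened in a browser like the index itself.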

Trying to read sitemap triggers a 404 error

4SEO sitemap, located at https://yoursite.com/sitemap-4seo.xml, is not a real file, sitting on your web server disk. Instead, it's rendered by Joomla just like any other page of your website.

However, some 3rd-party extensions, or your server .htaccess file, can sometimes block .xml or .txt files. If that happens, a 404 error page generated by your webserver will be displayed when trying to access your sitemap.

Make sure to configure your .htaccess file correctly to allow access to .xml files. Likewise, configure 3rd-party extensions such as Admin Tools to allow 4SEO sitemap files to be read by search engines normally. See below for what seems to be a proper Admin Tools configuration. We'd still recommend consulting with them about the proper configuration.

Admin Tools incorrectly blocks my 4SEO sitemap

With its default configuration, Admin Tools by Akeeba blocks access to the 4SEO sitemap, causing a 404 Page not found error when you, or search engines, try to access it.

We have found the following configuration of Admin Tools to solve the problem. We encourage you to contact them to be sure this is a proper and permanent fix.

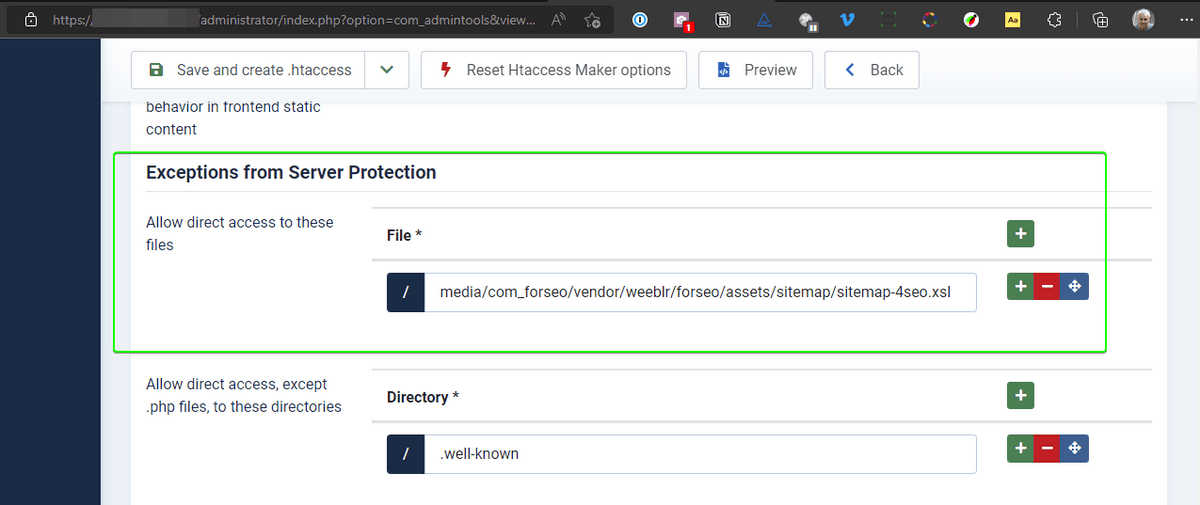

- Go to the .htaccess Maker feature from the Admin Tools control panel

- In the Exceptions from Server Protection section, add a record for the file media/com_forseo/vendor/weeblr/forseo/assets/sitemap/sitemap-4seo.xsl

It should look similar to this:

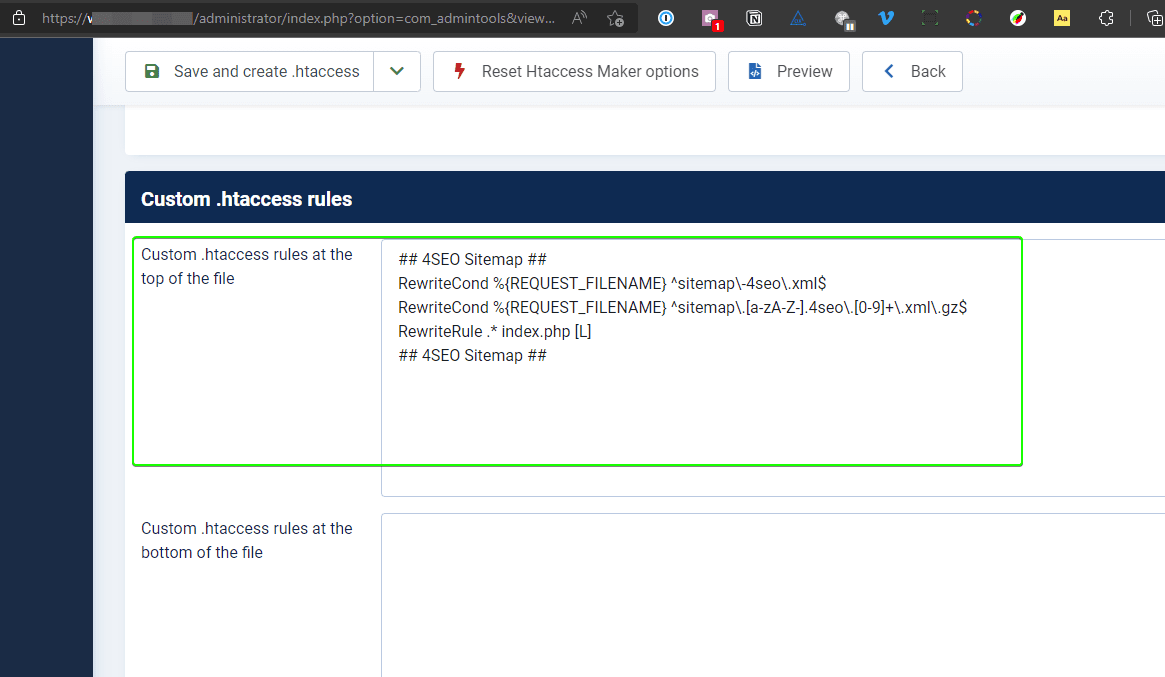

- In the Custom .htaccess rules at the top of the file field, paste the following:

    ## 4SEO Sitemap ##
    RewriteCond %{REQUEST_FILENAME} ^sitemap\-4seo\.xml$
    RewriteCond %{REQUEST_FILENAME} ^sitemap\.[a-zA-Z-].4seo\.[0-9]+\.xml\.gz$
    RewriteRule .* index.php [L]
    ## 4SEO Sitemap ##

It should look like this:

- Press the Save and create .htaccess button in the toolbar so that the changes are applied.

I cannot connect 4SEO to my Google Search Console

The process fails with the following error message:

Could not send authorization code to https://yourwebsitename.com

If you have a Cloudflare Pro account, some Cloudflare security features can block the connection between 4SEO and Google Search Console.

To allow a proper connection to the Search Console, a user has reported that the following configuration works:

- Whitelist the IP address 45.33.72.49 in the Cloudflare Firewall

- Make sure the cache level is set to Standard

- Switch Definitely automated bots to Allow