Most sites have a robots.txt file that tells search engines which parts of a website they may explore and index, and which parts they should stay away from.

4SEO includes a simple editor to let you change the content of your robots.txt file, as well as an optimizer which can make (small) changes to the default Joomla robots.txt file to improve SEO results.

The robots.txt file is also used to signal to search engines that you have an XML sitemap. This is done by adding a specific line to the robots.txt file and is handled automatically by 4SEO if you enable XML sitemap handling.
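As an illustration of what that sitemap line looks like and how a crawler reads it, here is a minimal sketch using Python's standard `urllib.robotparser` module. The robots.txt content and the sitemap URL are placeholders chosen for this example; the exact line 4SEO writes for your site may differ.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content: the domain and sitemap path are
# placeholders, not the values 4SEO generates for a real site.
ROBOTS_TXT = """\
User-agent: *
Disallow: /administrator/

Sitemap: https://www.example.com/sitemap.xml
"""


def declared_sitemaps(robots_txt: str) -> list[str]:
    """Return the sitemap URLs declared in a robots.txt body."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    # site_maps() returns None when the file has no Sitemap line.
    return parser.site_maps() or []


print(declared_sitemaps(ROBOTS_TXT))
```

A file without a `Sitemap:` line yields an empty list here, which is a quick way to check whether the line is present after enabling XML sitemap handling.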

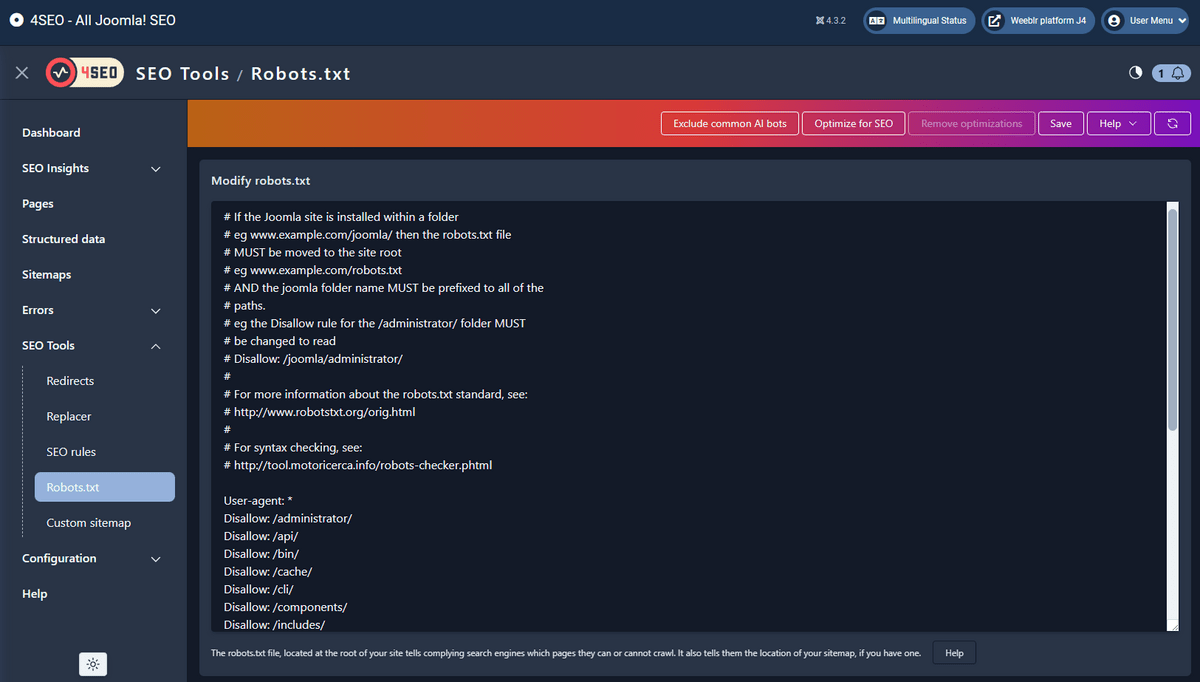

Editing the robots.txt file

Visiting the Robots.txt menu item will show you the content of your robots.txt file, if you have one (if you don't, the editor will be empty and you can just type in your desired content).

After making any change, press the Save button in the toolbar. The toolbar itself has only 2 actions: Optimize for SEO and Remove optimizations.

Exclude common AI bots

AI companies such as OpenAI, makers of ChatGPT, or Google with Bard, have robots that crawl websites to harvest content used in training their AI systems.

This may be good or bad for you, depending on whether you want your content to be known and used by AI systems such as ChatGPT (which won't link back or send traffic to your site), or whether you prefer to stay in control of who accesses and uses your content.

You can block common AI robots on the Robots.txt page using the Exclude common AI bots button. This will cause a few lines to be added to your robots.txt file to tell compliant crawlers that you don't allow them to use your website content.

Currently, 4SEO blocks 2 robots from OpenAI and one from Google:

- GPTBot: the OpenAI robot that crawls the web to gather information used to train future versions of ChatGPT

- ChatGPT-User: the crawler used by ChatGPT plugins, which can fetch information from a website to respond to a specific user question

- Google-Extended: a crawler used by Google to get data to train Bard and Vertex AI (their AI platform to build AI applications)

4SEO blocks the entire website for each of these crawlers. If you need more fine-grained control, or only want to block one but not the other, you can directly modify your robots.txt from within 4SEO as needed.

- These instructions only apply to future crawls by OpenAI robots. If ChatGPT has already been trained on your content, it's too late: you cannot make it "forget" or "unlearn" your content.

- Other AI companies may have other crawlers, and they likely won't respect these instructions.
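The blocking rules described above can be sketched and checked as follows. This is only an assumed form of the lines involved (one `User-agent` group per crawler, each disallowing the whole site); the exact text 4SEO writes may differ. The second rule set is a hypothetical fine-grained variant, with `/members/` as a made-up folder name, of the kind you could type into the editor yourself.

```python
from urllib.robotparser import RobotFileParser

# Assumed form of the "block everything" rules for the three crawlers.
AI_BLOCK = """\
User-agent: GPTBot
Disallow: /

User-agent: ChatGPT-User
Disallow: /

User-agent: Google-Extended
Disallow: /
"""

# Hypothetical fine-grained variant: only GPTBot is restricted,
# and only for one (made-up) folder.
FINE_GRAINED = """\
User-agent: GPTBot
Disallow: /members/
"""


def allowed(robots_txt: str, agent: str, path: str = "/any-page") -> bool:
    """Tell whether `agent` may fetch `path` under the given rules."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(agent, "https://www.example.com" + path)


for agent in ("GPTBot", "ChatGPT-User", "Google-Extended", "Googlebot"):
    print(agent, allowed(AI_BLOCK, agent))
```

With the first rule set, the three AI crawlers are refused everywhere while a regular search crawler such as Googlebot is unaffected. Keep in mind that this only models what a compliant crawler would do; robots.txt cannot enforce anything.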

Optimize for SEO

Pressing this button will cause 4SEO to modify the robots.txt file content for better SEO results. Namely, it will allow search engines to crawl more folders than what the default Joomla robots.txt allows.

This is extremely important as it's quite common to have CSS and JavaScript files in folders blocked by Joomla. Without access to your site's JavaScript and CSS, search engines cannot determine whether it is, for instance, mobile-compatible, nor can they properly evaluate its speed, understand its content and more.
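To make the effect concrete, the sketch below compares two rule sets with Python's standard `urllib.robotparser`. Both are illustrative assumptions: the folder names, the stylesheet URL and the "after" version are invented for this example and are not the exact changes 4SEO applies (it may, for instance, add rules rather than remove them).

```python
from urllib.robotparser import RobotFileParser

# Illustrative "before" rules: folders that may hold extension CSS/JS
# are blocked. The folder list is an assumption for this example.
BEFORE = """\
User-agent: *
Disallow: /administrator/
Disallow: /modules/
Disallow: /plugins/
"""

# Hypothetical "after" rules: asset folders are no longer blocked.
AFTER = """\
User-agent: *
Disallow: /administrator/
"""

# Made-up stylesheet URL inside one of the blocked folders.
ASSET = "https://www.example.com/modules/mod_example/css/style.css"


def can_crawl(robots_txt: str, url: str, agent: str = "Googlebot") -> bool:
    """Tell whether `agent` may fetch `url` under the given rules."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(agent, url)


print("before:", can_crawl(BEFORE, ASSET))
print("after: ", can_crawl(AFTER, ASSET))
```

Under the first rule set the stylesheet is off limits to the crawler, so the page cannot be rendered as visitors see it; under the second it can be fetched, while the administrator area stays blocked in both.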

4SEO will not automatically save the changes it makes, to let you validate them first. Be sure to press the Save button after optimizing.

Any change 4SEO makes to the file content is clearly marked as such with comments.

Remove optimization

Clicking this button will cause 4SEO to revert any change it made, restoring the original content.