Sitemaps

4SEO can create and update an XML sitemap, and submit it to search engines, entirely automatically. You can also manually add pages to the sitemap or remove pages from it as you see fit.

Sitemaps are used by search engines for two main reasons:

- to discover pages that are not naturally linked from other parts of your site

- to help decide which page is the canonical one when the same content can be accessed through multiple URLs

For larger sites, they can also help search engines decide which pages to crawl, and which to crawl first, by prioritizing canonical pages.

Because 4SEO crawls your entire site, it can easily build an exhaustive list of its pages. But simply putting all those pages in the sitemap would be a poor choice from an SEO standpoint.

So 4SEO pays close attention to including only the right pages in your XML sitemap, for the best SEO benefit.

Sitemaps should almost never contain all pages of a site

Only important pages, with SEO value, should be listed. Likewise, if a page can be accessed through multiple URLs, only the single canonical URL should be listed.

And of course, pages that have a noindex meta tag, or that are excluded by a robots.txt directive, should never be included.

In the same way, a sitemap made up only of links from your menus is useless: those links are all discovered as soon as the home page is loaded, and they add no information about canonicalization.
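To make the canonical rule concrete, here is a minimal Python sketch. It assumes a hypothetical input: a mapping from every crawled URL to the canonical URL that page declares (for instance through a rel="canonical" link). The function name `canonical_urls` is made up for illustration; this is not how 4SEO is implemented.

```python
def canonical_urls(crawled: dict[str, str]) -> list[str]:
    """Return the URLs worth listing in a sitemap, in crawl order.

    `crawled` is a hypothetical mapping: crawled URL -> canonical URL declared
    by that page. Only URLs that are their own canonical are kept, so alternate
    addresses of the same content (sorting parameters, non-SEF URLs, ...) are dropped.
    """
    return [url for url, canonical in crawled.items() if url == canonical]
```

With this rule, a product page reachable through three different addresses contributes exactly one entry: the address it declares as canonical.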

Sitemaps generation

4SEO generates a sitemap automatically once a site analysis has completed. This cannot happen earlier, because 4SEO must crawl the entire site to identify canonical URLs and also perform checks such as:

- presence of a noindex meta tag

- exclusion of a page by a robots.txt directive
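The two checks listed above can be sketched in Python with the standard library only. This is a simplified, assumed model: `is_sitemap_eligible` and `RobotsMetaParser` are hypothetical names, and Python's `urllib.robotparser` uses plain prefix matching, so it does not support the `*` and `$` wildcards that Google accepts inside robots.txt paths.

```python
from html.parser import HTMLParser
from urllib.robotparser import RobotFileParser


class RobotsMetaParser(HTMLParser):
    """Collects directives from <meta name="robots" content="..."> tags."""

    def __init__(self) -> None:
        super().__init__()
        self.directives: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        values = dict(attrs)  # HTMLParser already lowercases attribute names
        if (values.get("name") or "").lower() == "robots":
            content = values.get("content") or ""
            self.directives.extend(d.strip().lower() for d in content.split(","))


def is_sitemap_eligible(url: str, html: str, robots_txt: str,
                        user_agent: str = "Googlebot") -> bool:
    """Hypothetical check: not blocked by robots.txt and not marked noindex."""
    # Check 1: robots.txt must allow this user agent to fetch the URL.
    robots = RobotFileParser()
    robots.parse(robots_txt.splitlines())
    if not robots.can_fetch(user_agent, url):
        return False
    # Check 2: no "noindex" (or "none", which implies noindex) robots meta tag.
    meta = RobotsMetaParser()
    meta.feed(html)
    return not {"noindex", "none"} & set(meta.directives)
```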

Once a sitemap is ready, it is automatically published. From then on, 4SEO constantly monitors the site for changes and new content.

When such a change or addition is detected, the new or modified page is analyzed again. New pages are added to the sitemap file if they meet all requirements. Modified pages are checked again and may be added to the sitemap (if they were previously excluded) or removed from it (if they no longer meet the requirements).
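The add/remove behavior described above amounts to a simple set update. The sketch below assumes a hypothetical `analyzed` mapping that records, for each new or modified URL, whether it still meets every sitemap requirement; it only illustrates the logic and says nothing about how 4SEO stores its sitemap.

```python
def apply_changes(sitemap: set[str], analyzed: dict[str, bool]) -> set[str]:
    """Return an updated copy of `sitemap` after some pages were re-analyzed.

    `analyzed` (hypothetical) maps each new or modified URL to True if it
    meets every sitemap requirement (canonical, indexable, not blocked...).
    """
    updated = set(sitemap)  # leave the caller's set untouched
    for url, eligible in analyzed.items():
        if eligible:
            updated.add(url)      # new page, or page that was previously excluded
        else:
            updated.discard(url)  # page that no longer qualifies (no error if absent)
    return updated
```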

Smaller sites, with fewer than 500 pages, may not need a sitemap at all, according to Google's documentation: they are small enough that search engines will crawl them entirely anyway.

However, even in that case an SEO-oriented sitemap can be useful if it properly indicates canonical and important pages only.

Sitemaps status

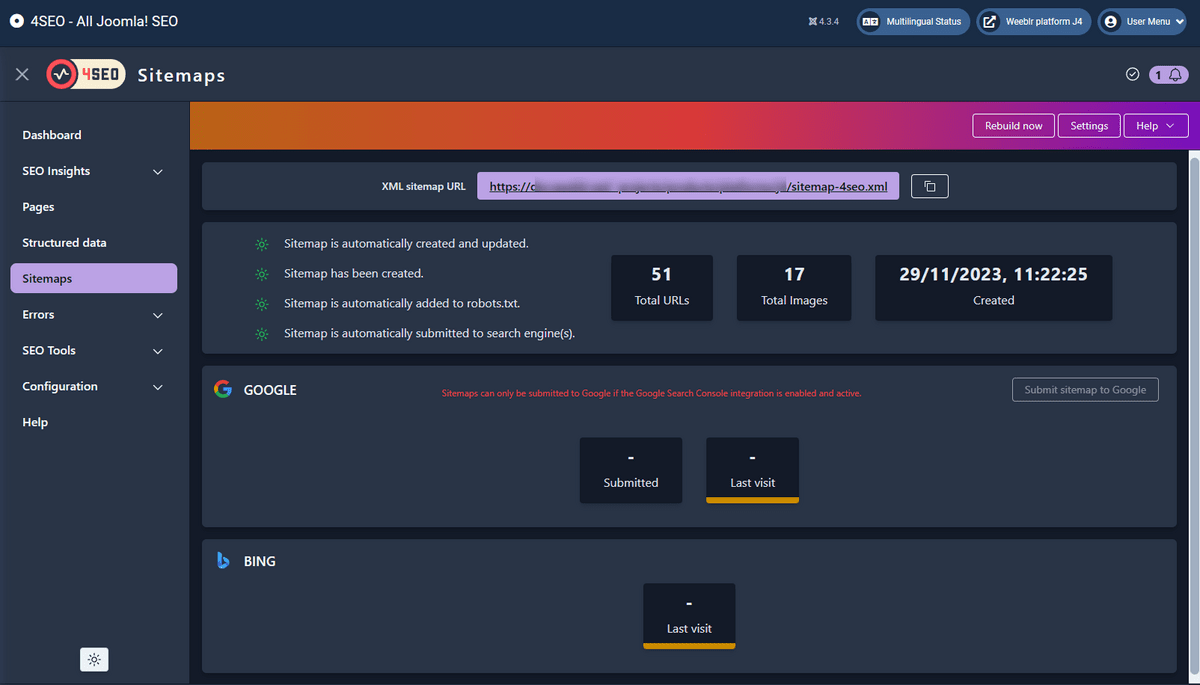

The Sitemaps page has a dashboard that tells you all about the sitemap status:

Note that the Last visit recording is not always accurate: 4SEO may miss some Google bot visits.

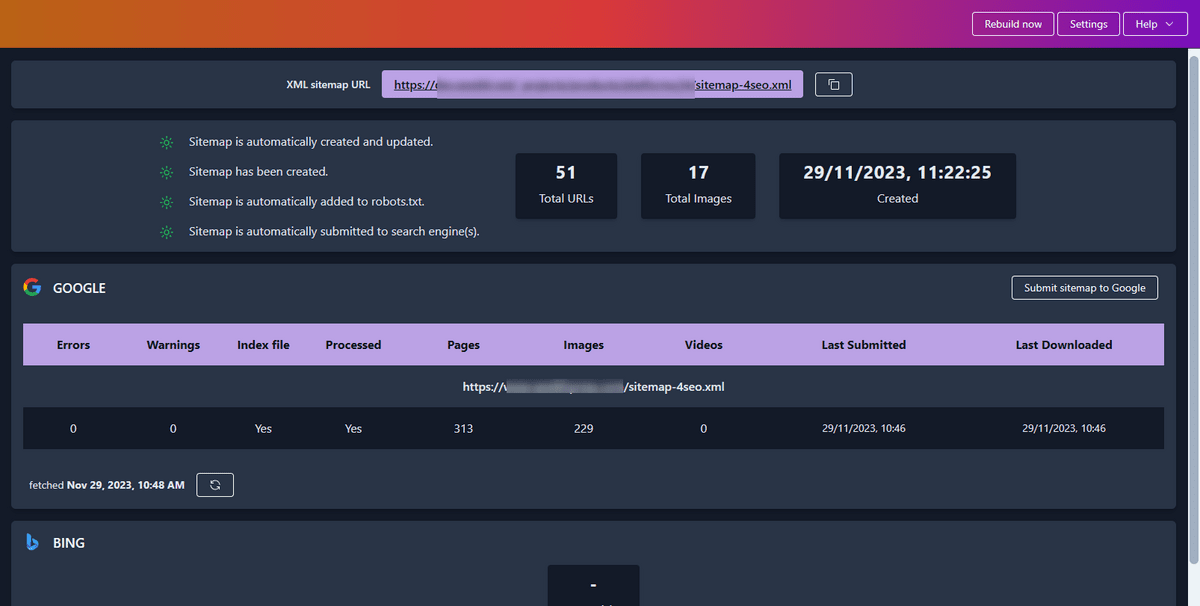

If you connect 4SEO to your Google Search Console account however, it can then read the data Google has about all of your website sitemaps, including how many URLs where discovered in these sitemaps.

Here is an example:

You can use the Submit sitemap to Google button to make 4SEO send the current sitemap to Google.

4SEO does this automatically whenever your sitemap is created or updated. Submitting it yourself can still be useful if you have manually created or modified custom sitemaps.

Images sitemap

4SEO also generates an image sitemap for your site. Image detection can be controlled in the Sitemaps settings.

Images are listed inside the main sitemap file, so there is no separate image sitemap file.

As with the main content sitemap, 4SEO tries to include only relevant and useful images in your sitemap. For instance, by default it only includes images wider than 200 pixels.

You can adjust this option, and several others, in the corresponding settings section to fine-tune which images are included and which are not.

4SEO currently only searches for images in content displayed using an img HTML element. As a result, images displayed through JavaScript, as is often the case with image gallery extensions, may only be picked up at smaller dimensions.
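A rough Python sketch of this kind of detection, under stated assumptions: it reads only `<img>` elements from the raw HTML, uses the HTML `width` attribute as the image width (4SEO may well measure images differently), and skips images without a numeric width. It also shows why JavaScript galleries are a problem: if the page HTML only contains a small thumbnail `<img>` and the full-size image is loaded by a script, only the thumbnail is visible to the parser. `ImgCollector` and `sitemap_images` are hypothetical names.

```python
from html.parser import HTMLParser
from typing import Optional


class ImgCollector(HTMLParser):
    """Collects (src, width) for every <img> element found in the HTML."""

    def __init__(self) -> None:
        super().__init__()
        self.images: list[tuple[str, Optional[int]]] = []

    def handle_starttag(self, tag, attrs):
        # Self-closing <img ... /> tags are routed here too by HTMLParser.
        if tag != "img":
            return
        values = dict(attrs)
        src = values.get("src")
        if not src:
            return
        width = values.get("width")
        # Only plain pixel values such as width="800" are understood here.
        self.images.append((src, int(width) if width and width.isdigit() else None))


def sitemap_images(html: str, min_width: int = 200) -> list[str]:
    """Return image URLs whose declared width is strictly greater than min_width."""
    parser = ImgCollector()
    parser.feed(html)
    return [src for src, width in parser.images
            if width is not None and width > min_width]
```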

Sitemaps blocking by .htaccess or 3rd-party extensions

The 4SEO sitemap, located at https://yoursite.com/sitemap-4seo.xml, is not a real file sitting on your web server's disk. Instead, it is rendered by Joomla just like any other page of your website.

However, some 3rd-party extensions, or your server's .htaccess file, can block access to .xml or .txt files. If that happens, a 404 error page generated by your web server will be displayed when trying to access your sitemap.

Make sure your .htaccess file is configured to allow access to .xml files. Likewise, configure 3rd-party extensions such as Admin Tools to allow 4SEO sitemap files to be read normally by search engines.
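To check whether your sitemap is reachable, you can simply open its address in a browser. The Python sketch below automates the same check. `check_sitemap` is a hypothetical helper, the 2 KB read limit is an arbitrary choice, and the `opener` parameter exists only so the function can be tested without network access.

```python
import urllib.error
import urllib.request


def check_sitemap(url: str, opener=urllib.request.urlopen) -> str:
    """Hypothetical helper: fetch `url` and report whether it looks like a sitemap."""
    try:
        with opener(url, timeout=10) as response:
            # The first 2 KB are enough to spot the root element.
            head = response.read(2048).decode("utf-8", errors="replace")
    except urllib.error.HTTPError as err:
        # A 404 here may mean .htaccess or an extension is blocking .xml files.
        return f"blocked or missing (HTTP {err.code})"
    except urllib.error.URLError as err:
        return f"unreachable ({err.reason})"
    if "<urlset" in head or "<sitemapindex" in head:
        return "ok"
    return "reachable, but the response does not look like a sitemap"
```

For example, `print(check_sitemap("https://yoursite.com/sitemap-4seo.xml"))`. A result mentioning HTTP 404 is consistent with the blocking described above, although other causes are possible.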

Manually adding or removing pages from sitemaps

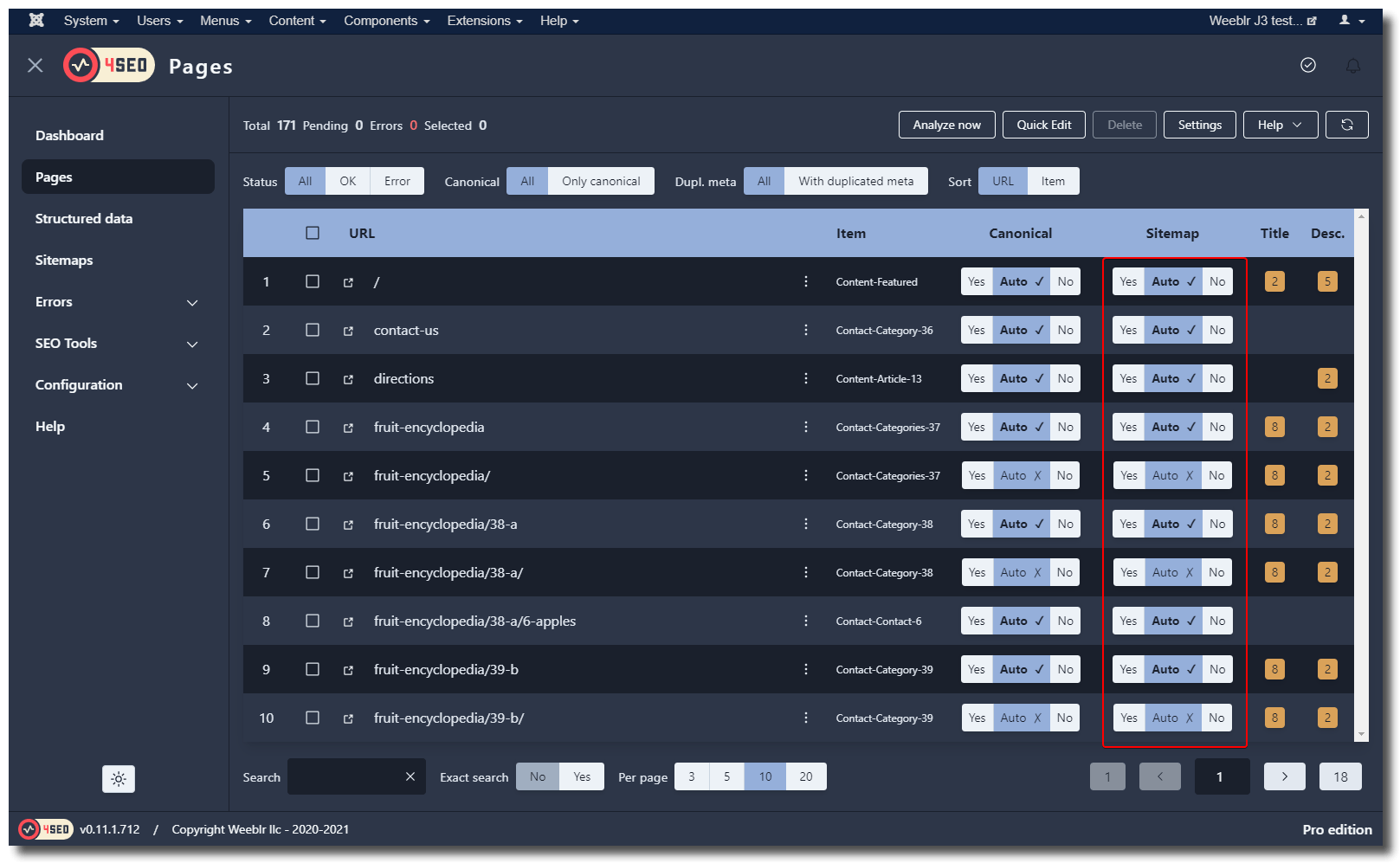

While the sitemap process creation is entirely automatic, including selecting canonical URLs and checking robots.txt file exclusion, you can manually remove or add back individual pages as you see fit.

Go to the Pages page and use the Sitemap column to exclude/include pages:

Adding pages to sitemap with a custom file

In some rare cases, you may want to add pages or assets to your sitemap that 4SEO has not discovered, or has not found useful in terms of SEO. You can easily do this by listing them in a file located at the root of your site.

You can create one or both of these files:

- sitemap-4seo-custom.txt

- sitemap-4seo-custom.xml

If one or both of these files are found at the root of your site when 4SEO creates or updates your sitemap, they will be included so that search engines can read them.

Note that if your sitemap has already been built, you'll need to use the Rebuild now button on the Sitemaps page toolbar to make 4SEO take the newly added custom sitemap(s) into account.

sitemap-4seo-custom.txt

This is a simple text file in which you can only list page addresses. Do not list images or videos in this file: search engines only accept website pages in a text sitemap.

Just list the desired page addresses, one per line:

https://example.com/some/url-1
https://example.com/some/url-2
https://example.com/some/url-3.html

Use only fully-qualified addresses, including the domain name (https://example.com, for instance).
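Because every line must be a fully-qualified address, a quick check before saving the file can catch relative paths or missing schemes. This is a minimal Python sketch; `invalid_lines` is a hypothetical helper name, and it only checks the shape of each address, not whether the pages exist.

```python
from urllib.parse import urlsplit


def invalid_lines(text: str) -> list[tuple[int, str]]:
    """Return (line number, content) for lines that are not fully-qualified URLs."""
    problems = []
    for number, line in enumerate(text.splitlines(), start=1):
        entry = line.strip()
        if not entry:
            continue  # blank lines are skipped by this check
        parts = urlsplit(entry)
        # A fully-qualified address needs an http(s) scheme and a domain name.
        if parts.scheme not in ("http", "https") or not parts.netloc:
            problems.append((number, entry))
    return problems
```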

Use the Tools | Custom sitemap menu item to directly edit this file from within 4SEO.

sitemap-4seo-custom.xml

This file is a regular sitemap. You can list page addresses in it, as well as images or videos. However, it must use a valid sitemap format.

- Regular sitemap example

<?xml version="1.0" encoding="UTF-8"?>
<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url>
    <loc>https://example.com/some/url-1</loc>
  </url>
  <url>
    <loc>https://example.com/some/url-2</loc>
    <lastmod>2021-01-20T16:45:08Z</lastmod>
  </url>
</urlset>

- Example with images

<?xml version="1.0" encoding="UTF-8"?>
<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9" xmlns:image="http://www.google.com/schemas/sitemap-image/1.1">
  <url>
    <loc>https://example.com/some/url-1</loc>
  </url>
  <url>
    <loc>https://example.com/some/url-2</loc>
    <image:image>
      <image:loc>https://example.com/images/logo.svg</image:loc>
    </image:image>
    <image:image>
      <image:loc>https://example.com/images/product.jpg</image:loc>
      <image:caption>A wonderful product</image:caption>
    </image:image>
  </url>
</urlset>

Valid sitemaps are tedious to build by hand. Online sitemap generators can help you create the custom sitemap content, which you can then save as, or copy and paste into, the 4SEO custom sitemap file.
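As an alternative to online generators, a small script can produce the same format. The Python sketch below uses the standard `xml.etree.ElementTree` module; the input structure (dicts with a `loc` key and optional `lastmod` and `images` keys) and the `build_custom_sitemap` name are assumptions made for illustration.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"
IMAGE_NS = "http://www.google.com/schemas/sitemap-image/1.1"

# Serialize as xmlns="..." and xmlns:image="..." instead of ns0/ns1.
# Note: register_namespace changes module-wide ElementTree state.
ET.register_namespace("", SITEMAP_NS)
ET.register_namespace("image", IMAGE_NS)


def build_custom_sitemap(pages: list[dict]) -> str:
    """Build sitemap XML from dicts with a 'loc' key and optional 'lastmod'/'images'."""
    urlset = ET.Element(f"{{{SITEMAP_NS}}}urlset")
    for page in pages:
        url = ET.SubElement(urlset, f"{{{SITEMAP_NS}}}url")
        ET.SubElement(url, f"{{{SITEMAP_NS}}}loc").text = page["loc"]
        if "lastmod" in page:
            ET.SubElement(url, f"{{{SITEMAP_NS}}}lastmod").text = page["lastmod"]
        for image_loc in page.get("images", []):
            image = ET.SubElement(url, f"{{{IMAGE_NS}}}image")
            ET.SubElement(image, f"{{{IMAGE_NS}}}loc").text = image_loc
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body
```

The returned string can then be saved as sitemap-4seo-custom.xml at the root of your site.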

Sitemaps publication

Once a sitemap has been created, or each time it's updated (pages added or removed), 4SEO will publish it.

There are two ways your site can advertise the presence of a sitemap file:

- through your robots.txt file

- by directly submitting it to Google
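For the robots.txt route, the sitemap is advertised with a `Sitemap:` line, which applies independently of any `User-agent` group. The Python sketch below reads such lines with the standard library's `RobotFileParser.site_maps()` (Python 3.8 or later). The robots.txt content shown is only an example, not necessarily what 4SEO writes, and `advertised_sitemaps` is a hypothetical name.

```python
from urllib.robotparser import RobotFileParser

# Example robots.txt content (assumed for illustration).
ROBOTS_TXT = """\
User-agent: *
Disallow: /administrator/

Sitemap: https://www.yoursite.com/sitemap-4seo.xml
"""


def advertised_sitemaps(robots_txt: str) -> list[str]:
    """Return the sitemap URLs declared with 'Sitemap:' lines in robots.txt."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    # site_maps() returns None when no Sitemap: line is present.
    return parser.site_maps() or []
```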

4SEO can do both, unless you tell it otherwise using the Settings toolbar button of the Sitemaps page. See the Settings page for more details.

4SEO will automatically submit your sitemap to Google when it's ready. You can of course also submit it manually using the search engines' search consoles, although this is not necessary.

If you do submit the sitemap manually, however, be sure to wait until it is ready. The sitemap status is displayed on the 4SEO Sitemaps page.

Submitting your sitemap directly to Google requires that 4SEO is connected to your Google Search Console account.

If you submit your sitemap to search engines yourself, the address to submit is https://www.yoursite.com/sitemap-4seo.xml. You can copy this URL from the Sitemaps page of 4SEO admin.

Do not submit any sitemap address other than this one.

Specifically, DO NOT SUBMIT the 4SEO sitemaps "sub-files" with names similar to https://www.yoursite.com/sitemap.en-GB.4seo.1.xml.

These files should not be submitted directly: they are only parts of the main sitemap index.
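Search engines find the sub-files by reading the index itself, which is why submitting the index is enough. The sketch below lists the sub-sitemaps referenced by a `<sitemapindex>` document using Python's `xml.etree.ElementTree`. The sample index is hypothetical and only reuses the sub-file naming pattern mentioned above; `index_children` is a made-up name.

```python
import xml.etree.ElementTree as ET

NS = {"s": "http://www.sitemaps.org/schemas/sitemap/0.9"}

# Hypothetical sitemap index, for illustration only.
EXAMPLE_INDEX = b"""<?xml version="1.0" encoding="UTF-8"?>
<sitemapindex xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <sitemap>
    <loc>https://www.yoursite.com/sitemap.en-GB.4seo.1.xml</loc>
  </sitemap>
</sitemapindex>
"""


def index_children(index_xml: bytes) -> list[str]:
    """List the sub-sitemap URLs referenced by a <sitemapindex> document."""
    root = ET.fromstring(index_xml)
    if root.tag != "{http://www.sitemaps.org/schemas/sitemap/0.9}sitemapindex":
        raise ValueError("not a sitemap index")
    return [loc.text.strip() for loc in root.findall("s:sitemap/s:loc", NS)]
```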

IndexNow

IndexNow is a mechanism used by several search engines to be informed of pages that have just been modified on your site. You can think of it as a complement to your sitemap:

- valuable pages are listed in your sitemap

- if one of them is modified, use IndexNow to inform participating search engines of the change

IndexNow was originally a Microsoft Bing initiative. As of the start of 2023, the following search engines participate in it:

- Microsoft Bing (and Yahoo as a consequence, Yahoo being powered by Bing)

- Yandex

- Seznam.cz

Google does not use IndexNow. Although Google announced the start of an IndexNow testing process in mid-2021, nothing had come of it at the time of writing, 18 months later. Google becoming a participant is not generally considered likely.

You can submit a page to IndexNow right after making significant changes to it. All participating search engines are automatically informed of your submission. Each search engine may then come back to load the page and update its data.

This may increase the speed at which your website changes are indexed by search engines.

Do not submit existing pages that have not just been modified. IndexNow recommends using your sitemap for these pages, and 4SEO already handles your sitemap automatically.
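At the protocol level, an IndexNow submission is a single HTTP request sent to a participating endpoint such as `https://api.indexnow.org/indexnow`, and the key must also be published as a text file on your site (for instance `https://www.yoursite.com/<key>.txt`). The Python sketch below only builds the request and, following the advice above, keeps recently modified pages only. The `since` timestamp, the key value and the helper names are assumptions for illustration; this is not how 4SEO performs submissions.

```python
import json
import urllib.request
from datetime import datetime

INDEXNOW_ENDPOINT = "https://api.indexnow.org/indexnow"


def recently_modified(pages: dict[str, datetime], since: datetime) -> list[str]:
    """Keep only pages modified after `since`; unchanged pages are left to the sitemap."""
    return sorted(url for url, modified in pages.items() if modified > since)


def indexnow_request(host: str, key: str, urls: list[str]) -> urllib.request.Request:
    """Build (but do not send) an IndexNow batch submission as a JSON POST."""
    payload = {"host": host, "key": key, "urlList": urls}
    return urllib.request.Request(
        INDEXNOW_ENDPOINT,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json; charset=utf-8"},
        method="POST",
    )
```

Sending the request would then be a matter of passing it to `urllib.request.urlopen`.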