Hello Yannick

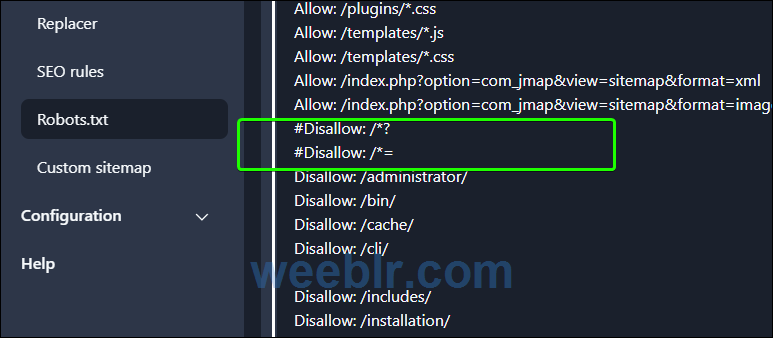

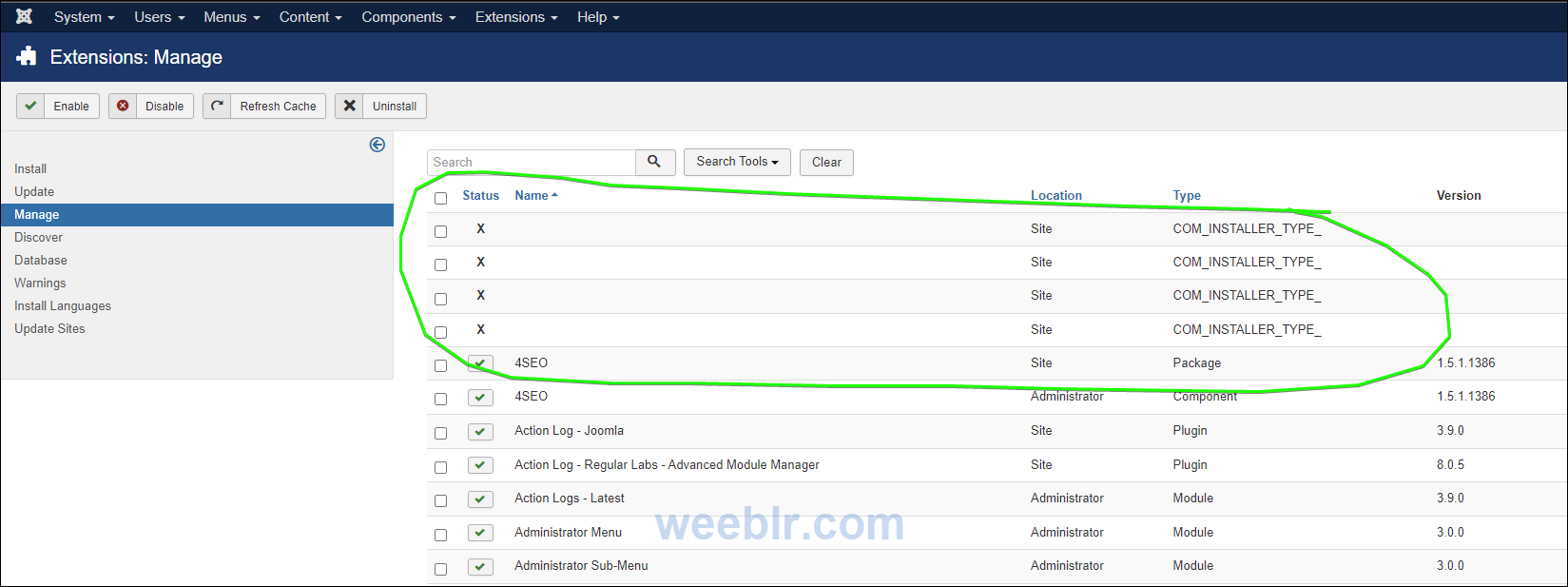

I have just installed 4SEO on my site www.example.com and have fallen at the first hurdle: the site analysis is stuck at 0% and I am getting a 403 error on the homepage. I think this might have something to do with a rule in the robots.txt file. From memory, my hosting company added a number of lines to robots.txt a few years ago because a lot of bots were visiting the site and eating up resources.

I used to use JSitemap on this site to create a sitemap, but it does not play nicely with a component I use called JomHoliday. That is why I have switched to 4SEO (plus it makes sense, given I have used sh404SEF for years).

Site details & login

https://www.example.com/administrator/

user: [redacted]

pass: [redacted]

Thanks

Iain Laverock