Hello,

we have just installed 4SEO and it shows only 1 page after "Analyze now" is "completed"?! According to sh404SEF we have about 1950 URLs (1433 visited).

What is going wrong here?

Hi

> What is going wrong here?

Of course, I can't say anything without many more details, starting with the full and real URL of the site and:

- Joomla version

- type of content on the site? Joomla content, other extensions?

- content of robots.txt (I can check when I have the site URL)

- whether full page caching (Cloudflare, another CDN, or server-level caching, for instance) is used.

Superadmin credentials would likely be useful to check how 4SEO was configured.

Best regards

Yannick Gaultier

weeblr.com / @weeblr

Hello Mr Gaultier,

I think it is simpler with backend access. The website is still under development (nearly final). We mainly want to use your tool to check SEO and broken links.

Later we will need some real help with the "Structured data".

https://[redacted].de/administrator/index.php?4sicht

Login: [redacted] | [redacted]

Hi

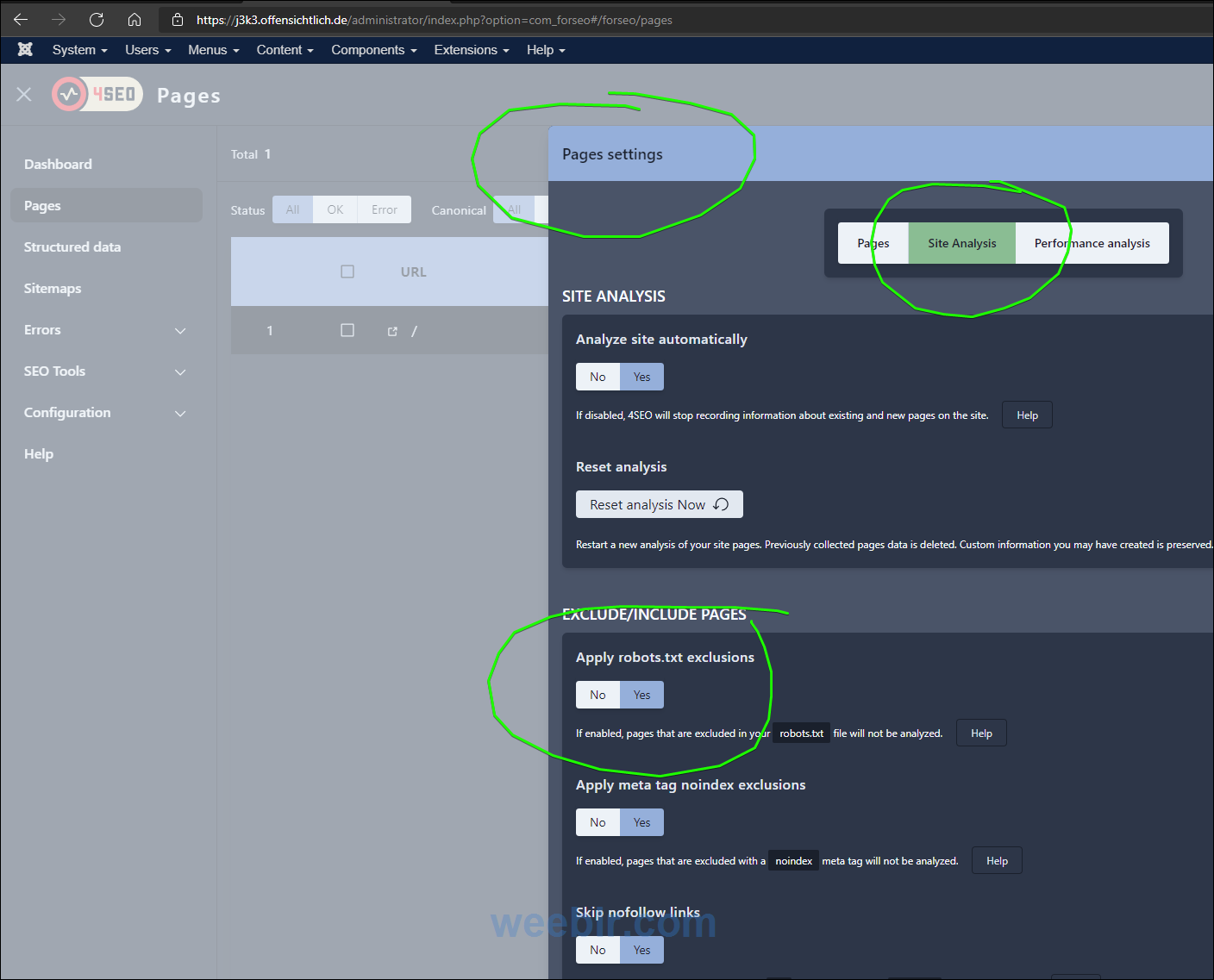

The problem is due to your robots.txt: it excludes your entire site from crawling. 4SEO complies with robots.txt directives, so after loading the home page it stops crawling the site because all links are blocked.

You should either:

- open the site

- or disable 4SEO compliance with robots.txt:

IMPORTANT: If you disable robots.txt compliance, you should re-enable it when you open the site to the public and remove the block in robots.txt.

If not, 4SEO may crawl some unwanted pages.
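To illustrate why the crawl stops after the home page, here is a minimal sketch using Python's standard `urllib.robotparser`. It does not show how 4SEO itself is implemented. The robots.txt content (a blanket `Disallow: /`), the `example.com` links and the `allowed_urls` helper are all assumptions made for this example.

```python
from urllib.robotparser import RobotFileParser

# Assumed robots.txt content: a blanket block, as is common on development sites.
ROBOTS_TXT = """\
User-agent: *
Disallow: /
"""


def allowed_urls(robots_txt, urls, user_agent="*"):
    """Return the subset of urls that robots_txt allows user_agent to fetch."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if parser.can_fetch(user_agent, url)]


# Hypothetical links discovered on the home page.
links = [
    "https://example.com/about",
    "https://example.com/blog/first-post",
]

# With the blanket block, a compliant crawler has nothing left to follow.
print(allowed_urls(ROBOTS_TXT, links))

# With an empty robots.txt, every link stays crawlable.
print(allowed_urls("", links))
```

A crawler that honours robots.txt ends up with an empty list of links to follow in the first case, which matches an analysis that reports a single page.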

> Later we would need some real help for the "Structured data".

What do you mean?

Best regards

Yannick Gaultier

weeblr.com / @weeblr

Hmm, I disabled 4SEO compliance with robots.txt, but the analysis still shows only 1 page?

PS: at the moment we do not want to open up the robots.txt, because of course Google should not index the development site.

Hi

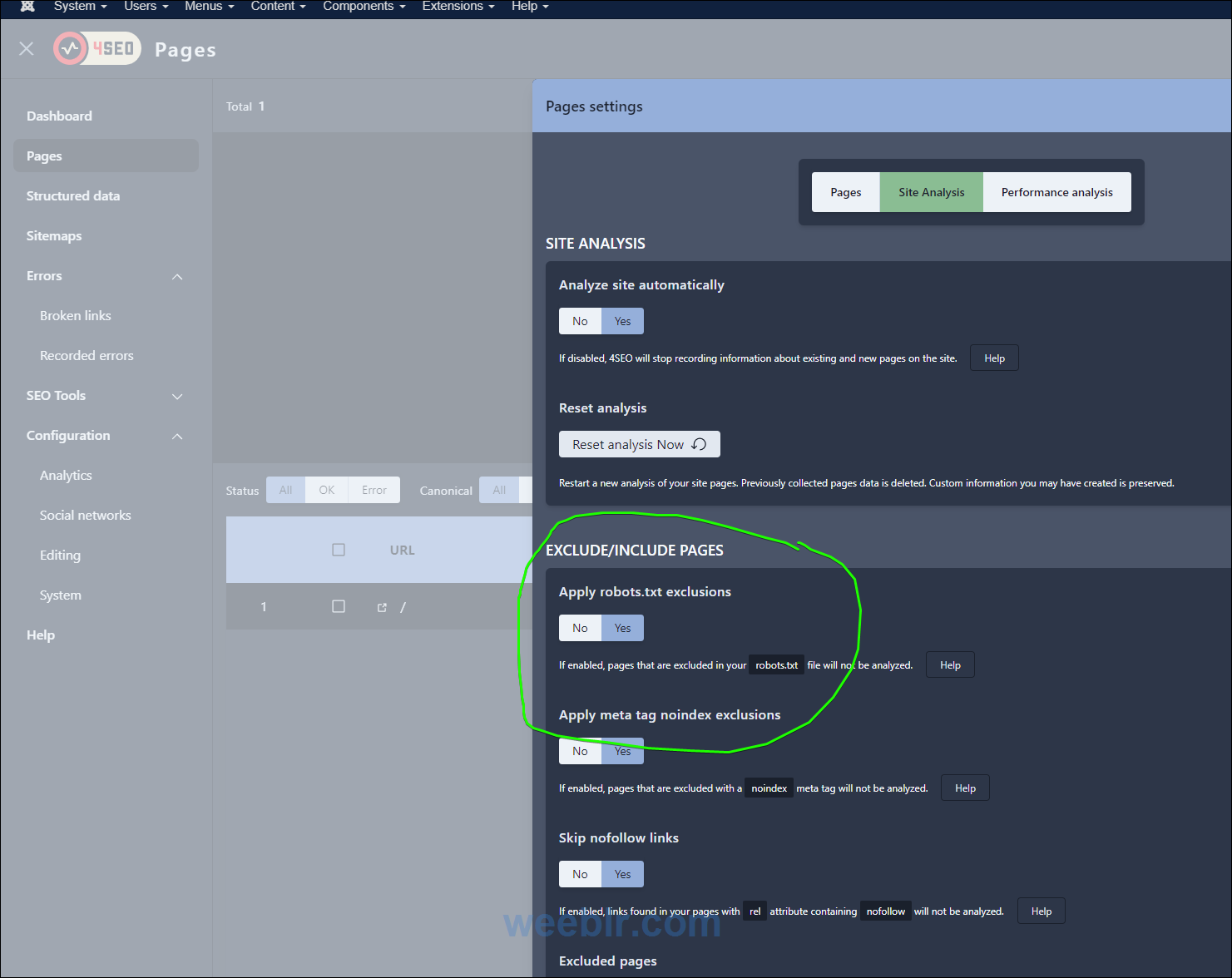

"Apply Robots.txt" is still set to Yes:

Did you reset it?

Best regards

Yannick Gaultier

weeblr.com / @weeblr

I would say - yes

Hi

OK, I am very confused now. You must change that setting. If you don't change it, 4SEO will continue to apply robots.txt exclusions.

Set it to NO so that robots.txt is not applied anymore.

And to be sure, after disabling "Apply robots.txt", also use "Reset analysis now" to clear everything, just in case.

Best regards

Yannick Gaultier

weeblr.com / @weeblr

Okayyyy :)

The last tip was crucial!

One last question.

Can you tell me where the changed descriptions are saved? In the 4SEO database? Or can they also be found in sh404SEF?

Hi

> The last tip was crucial!

Yes, sorry. When that setting is enabled, robots.txt is applied; when it's off, robots.txt is not applied.

> Can you tell me where the changed descriptions are saved? In the 4SEO database? Or can they also be found in sh404SEF?

4SEO data is saved in 4SEO's own database tables. 4SEO is not tied to sh404SEF. They can work together or separately (e.g. 4SEO can do all its work with just Joomla SEF URLs, with no need for sh404SEF).

If you already have meta data in sh404SEF, it will keep working as expected and there's nothing to do. In the future, you can use either sh404SEF or 4SEO to work on meta data, based on your preference.

Structured data, sitemaps and other features are only available in 4SEO though.

Best regards

Yannick Gaultier

weeblr.com / @weeblr